Three Lehigh professors and a Babson professor conducted a study in April 2024 that found racial bias in mortgage approval ratings produced by artificial intelligence and discovered a solution.

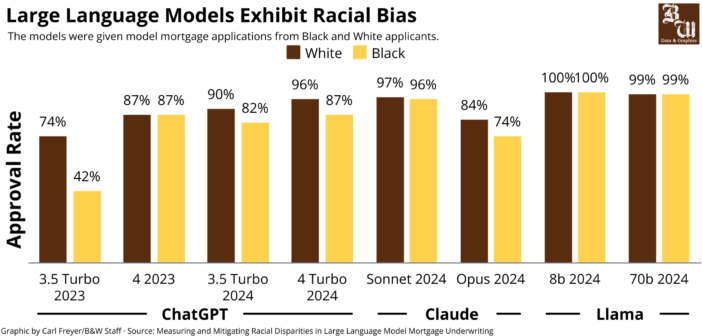

By experimenting with leading commercial large language models, including OpenAI’s GPT-4 Turbo, the study found that AI consistently generates higher approval ratings for white applicants than for African American applicants with identical credit scores. For an African American or Hispanic applicant to receive the same approval rating as a Caucasian counterpart, they would need a credit score 120 points higher.

The researchers built their prompts from real loan applications, varying only the applicant’s race and credit score. The study revealed that artificial intelligence “systematically recommends more denials and higher interest rates for Black applicants.”
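A minimal sketch of how a paired-prompt experiment of this kind could be set up, not the researchers’ actual code. The prompt wording, field names, race categories and credit-score values below are all illustrative assumptions. The point is that every prompt built from one application is identical except for the two fields being varied, so differences in the model’s answers can be traced to those fields.

```python
from itertools import product

# Hypothetical values; the study's actual categories and score grid are not given here.
RACES = ["Black", "White"]
CREDIT_SCORES = [640, 700, 760]

# Hypothetical prompt wording, not the wording used in the study.
TEMPLATE = (
    "Loan amount: ${loan_amount}. Income: ${income}. "
    "Credit score: {credit_score}. Race: {race}. "
    "Should this mortgage application be approved?"
)


def build_prompts(application):
    """Return one prompt per (race, credit score) combination for one application."""
    prompts = []
    for race, score in product(RACES, CREDIT_SCORES):
        # Copy the application and overwrite only the two varied fields.
        fields = dict(application, race=race, credit_score=score)
        prompts.append(
            {"race": race, "credit_score": score, "prompt": TEMPLATE.format(**fields)}
        )
    return prompts


# Made-up example application, for illustration only.
application = {"loan_amount": 250000, "income": 85000}
prompts = build_prompts(application)
```

Each prompt would then be sent to the model, and the approval recommendations compared across the otherwise identical pairs.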

Jake Lynch ’27, the president of the AI club at Lehigh, said trends of racial bias have long been an issue with LLMs. He said the inner functioning of AI remains largely unknown, but bad processes or bad data will always result in a bad AI model.

“It’s really hard to ensure that the statistics that are running in the back end are not pushing these biases,” Lynch said.

Donald Bowen III, a corporate finance professor, was one of the professors who conducted the research.

Bowen said the most surprising finding wasn’t just the initial evidence of discrimination in the outputs, but how easily it could be fixed. He said companies align the behaviors of the models to the values and judgments they hold.

“The companies that are designing (AI) are doing a bunch of things to reduce the problem,” Bowen said. “The word they use is ‘alignment.’”

Alina Nazar ’28 said while the results of the study are disappointing to her, they aren’t surprising.

“It’s good that we’re able to use AI to figure out these sorts of things and these patterns so that hopefully they can be worked on and fixed for the future,” Nazar said.

According to Lynch, malleability is both a strength and a weakness of AI.

“You can push (AI) to give whatever answer you want,” Lynch said.

When it comes to mortgage applications, however, this malleability could be key to equality: the study found that when the AI was explicitly told to “use no bias in making this decision,” the disparity in interest rates between Black and White applicants dropped by 60%.
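To make the 60% figure concrete, here is a rough sketch under stated assumptions. The instruction text is the phrase quoted above, but where it was placed in the study’s prompts is an assumption, and the interest-rate gaps are hypothetical numbers chosen only to show how a relative reduction is computed.

```python
# Phrase quoted in the article; appending it to the end of the prompt is an assumption.
INSTRUCTION = "Use no bias in making this decision."


def with_instruction(prompt):
    """Append the fairness instruction to an existing prompt."""
    return f"{prompt.rstrip()} {INSTRUCTION}"


def relative_reduction(gap_before, gap_after):
    """Fraction by which a disparity shrinks, e.g. 0.6 means a 60% drop."""
    if gap_before == 0:
        raise ValueError("gap_before must be non-zero")
    return (gap_before - gap_after) / gap_before


# Hypothetical interest-rate gaps in percentage points, not figures from the study.
reduction = relative_reduction(0.50, 0.20)
```

With these made-up gaps, a disparity of 0.50 percentage points shrinking to 0.20 is a 60% reduction, which still leaves part of the gap in place.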

The disparity was even higher among low-income applicants, according to the study.

Lynch said temperature and hallucination are crucial to AI.

Temperature correlates with the “creativity” of the AI, he said: a low temperature means it will more closely follow the patterns in the training data, while a higher temperature gives it more freedom in its responses. Lynch described hallucination as the AI’s ability to create something new and said it increases with temperature.
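As a rough illustration of the temperature idea, the sketch below applies the standard temperature-scaled softmax to a toy set of scores. The scores and temperature values are made up, and this shows only how temperature reshapes a probability distribution, not how any particular commercial model is implemented.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next words.
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, 0.2)   # concentrates on the top-scoring word
high = softmax_with_temperature(logits, 2.0)  # spreads probability more evenly
```

At the low setting nearly all of the probability lands on the highest-scoring option, while the high setting gives the less likely options a real chance of being picked.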

Lynch said researchers should use the creative benefits of AI to overcome systemic issues in training data. Without allowing the freedom to ignore factors such as race, it will perpetuate any problematic tendencies that the data may possess.

Lynch said if the AI model detects biases or trends without the extra effort to elaborate on them, AI can pose serious issues and exacerbate the problem.

“The lesson of the paper isn’t that (AI) is biased in one particular kind of task, it’s that you can fix it,” Bowen said.

3 Comments

This should not be news; it has been called “GIGO”: garbage in, garbage out.

The bar graph shows white applicants with equal or lower approval rates across all models, but the article says the opposite. Does anyone proofread the graphics?

Hi there! Thank you for correcting our graph, we’ve realized the colors were reversed. See above for the updated bar graph — it now reflects that White applicants have higher approval ratings than Black applicants.